Billions of agents, one internet: Separating trust from threat

Good Bots vs. Bad Bots

Billions of agents will be accessing the internet by 2030, some with legitimate intent and some with malicious intent. On one hand, hundreds of enterprises use Browserbase to automate human tasks in ways that would have been impossible a few years ago. On the other hand, thousands of bad actors use AI and “bad bots” to commit fraud and violate copyright laws.

The simple fact is that we have already opened Pandora’s box. AI agents are entities that operate on behalf of enterprises and individuals to perform tasks that would otherwise be performed by humans. That might mean searching for information or automating compliance for financial institutions.

If the internet is going to remain open, agents are going to need to self-identify. However, they will only do so if they are not indiscriminately penalized for it. Browserbase, Perplexity and Cloudflare all want to prevent bot farms, hackers from North Korea and flagrant copyright abuse from destroying the internet. To do that, the trust model needs to change.

The Problem

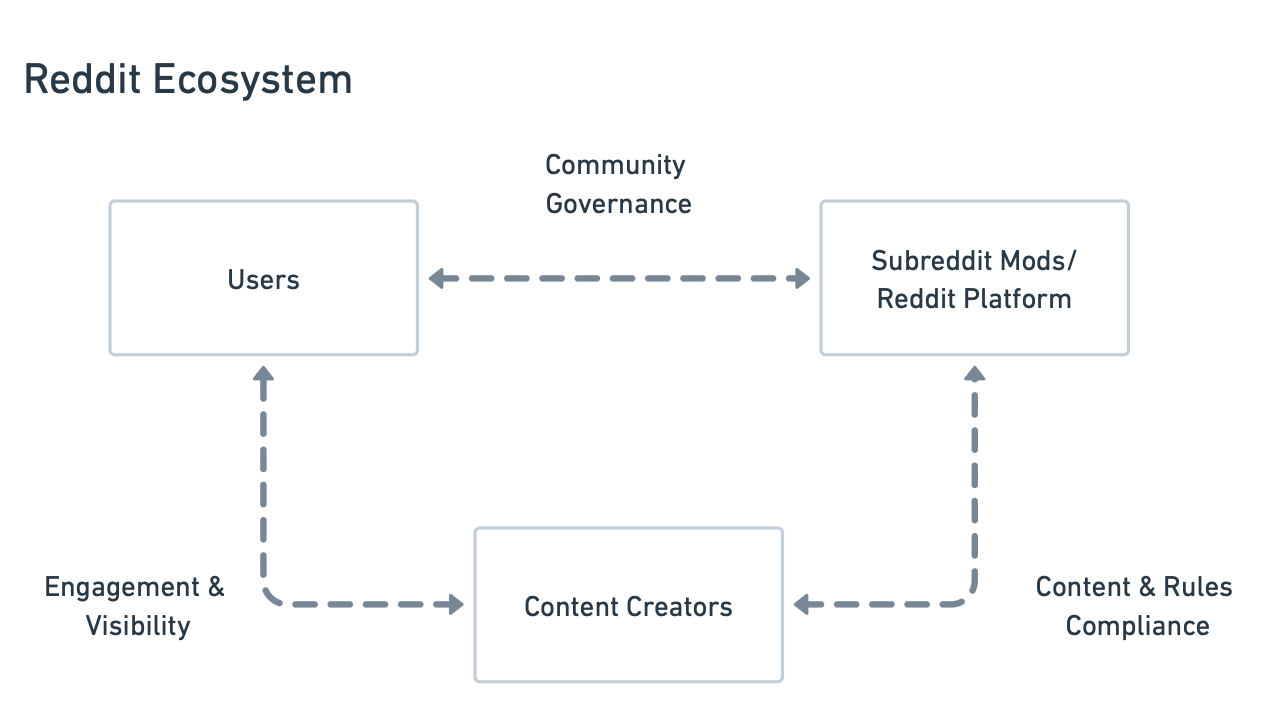

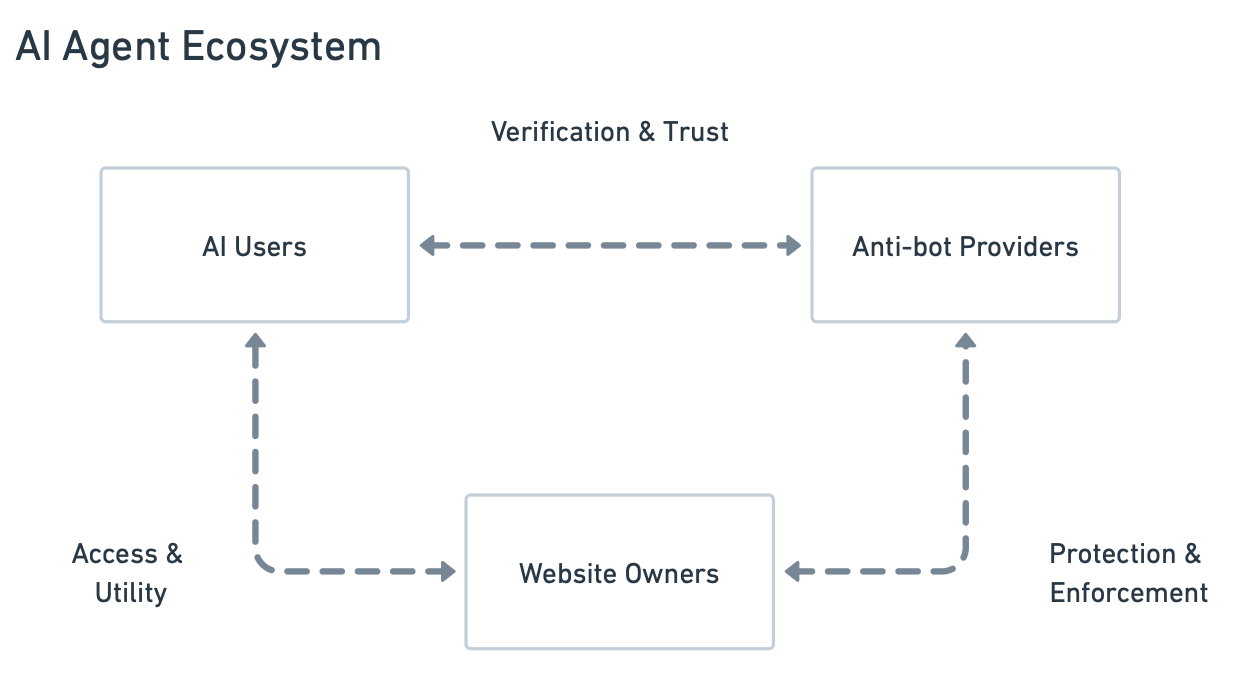

In the past, the relationship between users and anti-bot providers was transparent. Now the web is changing, as individuals delegate their tasks to AI agents that use the web to work on their behalf. Agent users, anti-bot providers and website owners need to partner to identify and enable legitimate use cases while punishing abuse. We need look no further than the copyright challenges presented by Reddit for an example of how this could work.

In the relationship structure above, all parties are incentivized to work together to make Reddit safe as it emerged as a common good. If we overlay this relationship model onto the AI agent discourse, we’ll see that a similar system emerges.

The Future

Enabling agent providers (Browserbase, Perplexity), anti-bot companies (Cloudflare, Google reCAPTCHA) and website owners to work hand in hand is not a simple task. However, it is absolutely critical for giving all AI agent developers equal opportunities under a trust-first model that restricts access once that trust is abused.

The open question is how we can enable agent authorization across the internet. Agents should be trusted but monitored for abuse and malicious activity. If abuse is detected, they should be restricted automatically, with additional mechanisms for website owners to notify and restrict agent owners on their own. This builds on top of a strong identity model and allows all parties to bring their own tools to the table.
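To make the trust-first idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how Browserbase or any anti-bot provider actually implements this: the `TrustRegistry` class, the `abuse_threshold` value, and the choice to restrict unidentified traffic by default are all hypothetical. It shows the three behaviors described above: identified agents start out allowed, repeated abuse signals restrict them automatically, and a website owner can restrict an agent on their own site.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Set, Tuple


class Access(Enum):
    ALLOWED = "allowed"
    RESTRICTED = "restricted"


@dataclass
class TrustRegistry:
    """Hypothetical trust-first registry: identified agents start out allowed."""

    # Assumed number of abuse signals before an agent is restricted everywhere.
    abuse_threshold: int = 3
    _abuse_counts: Dict[str, int] = field(default_factory=dict, init=False)
    _owner_blocks: Set[Tuple[str, str]] = field(default_factory=set, init=False)

    def report_abuse(self, agent_id: str) -> None:
        """Record one abuse signal raised by monitoring."""
        self._abuse_counts[agent_id] = self._abuse_counts.get(agent_id, 0) + 1

    def owner_restrict(self, site: str, agent_id: str) -> None:
        """Let a website owner restrict an agent on their own site only."""
        self._owner_blocks.add((site, agent_id))

    def check(self, site: str, agent_id: Optional[str]) -> Access:
        """Decide whether an agent may access a site."""
        # Assumption: traffic that does not self-identify gets no default trust.
        if agent_id is None:
            return Access.RESTRICTED
        # Site-specific restriction set by the website owner.
        if (site, agent_id) in self._owner_blocks:
            return Access.RESTRICTED
        # Automatic restriction once enough abuse has been detected.
        if self._abuse_counts.get(agent_id, 0) >= self.abuse_threshold:
            return Access.RESTRICTED
        return Access.ALLOWED
```

In this sketch, self-identifying costs a well-behaved agent nothing: it keeps its access until abuse is actually observed, which is the incentive the model depends on.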

At Browserbase, we are tackling this problem head on and have already engaged in partnership discussions with leading anti-bot providers and authentication providers like Stytch.

Meanwhile, we are tracking proposals advancing through the IETF to enable agent identity via Web Bot Auth, with multiple companies like Cloudflare and OpenAI actively implementing it at scale.

Browserbase is best-in-class browser infrastructure for AI builders with good intent who want to incorporate the web into their workflows.

We seek to work with website owners, anti-bot companies and agent developers to ensure malicious agents get blocked and legitimate agents are able to access the internet freely.

If you want to partner with Browserbase, we’d love to hear from you. Email us at trust@browserbase.com.